I was perusing an article about driverless racing cars and how the technology is driving innovation that will filter down to your average driverless family car.

Some of it was amazing, including an autonomous vehicle attempting the Goodwood Hill Climb. Apparently it set a record of 66.96 seconds, which is only about 12 seconds slower than some of the fastest manned vehicles on that famous climb.
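To put that gap in perspective, here is a quick back-of-envelope sketch. It assumes the "about 12 seconds" figure is exact, which it almost certainly isn't, so treat the result as a rough guide only.

```python
# Back-of-envelope comparison using the figures quoted above.
DEVBOT_TIME_S = 66.96   # autonomous run time quoted in the article
APPROX_GAP_S = 12.0     # "about 12 seconds", treated as exact here (assumption)

# Time implied for the fastest manned cars, and the gap as a percentage of it.
implied_manned_time_s = DEVBOT_TIME_S - APPROX_GAP_S
percent_slower = APPROX_GAP_S / implied_manned_time_s * 100

print(f"Implied manned time: {implied_manned_time_s:.2f} s")
print(f"DevBot 2.0 is roughly {percent_slower:.0f}% slower")
```

On those assumptions the autonomous car is a little over a fifth slower than the quickest human-driven runs.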

The vehicle, called DevBot 2.0, has six cameras, two radars, 18 ultrasonic sensors and five lidars (detectors that work on the radar principle but use a laser light source rather than microwaves). Quite a beast then, and encouraging to see this level of high tech in action.

Bryn Balcombe, Roborace’s chief strategy officer, tells us that DevBot 2.0 offers a way to assess the quality of the sensors and cameras that autonomous vehicles will rely on. He would not be too happy testing performance limits on real roads, though, and neither would I. I wonder what the police would do when faced with a speeding autonomous vehicle?

So, it’s all about the sensors, it would seem: whether to use a diverse range or, as Elon Musk of Tesla asserts, to rely on cameras alone. To that end, Mobileye, owned by Intel, one of the largest chip manufacturers, is building a camera-only autonomous vehicle.

I am not so sure. Looking at all those ‘sunlit uplands’ PR videos of the brave new world of autonomous vehicles, I haven’t seen one shot in snow, rain, freezing rain or fog (OK, the last one I probably couldn’t see anyway). Why is this, I hear you ask? Well, cameras clogged with snow aren’t too good at seeing. Even if they aren’t clogged, I think you’d find the image processing time goes through the roof with all those snowflakes drifting past. Would lidar work in fog or snow? Surely the laser beam would be blocked or attenuated? Would infrared sensors see people? Well, in the cold we all insulate ourselves but leave quite a hot spot: our faces. Could an infrared detector tell the difference between a face and, say, the hot brake disc of a decelerating vehicle?

A friend of mine bought a brand new car, one of the first with ultrasonic reversing sensors. Very pleased with it he was, until he reversed into a protruding scaffolding pole, which pushed in his rear window. The moral: never rely on a single type of sensor. In adverse weather, autonomous vehicles may be making poor decisions based on limited sensory input.

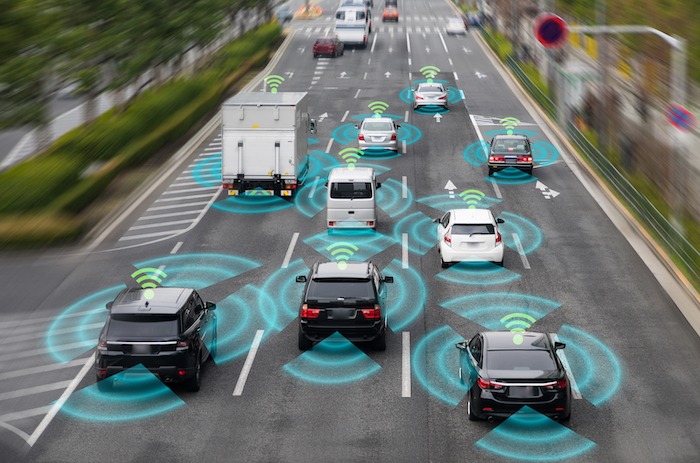

Looking at other PR videos, we see CGI of autonomous vehicles, whether in the same lane or as oncoming traffic. What hasn’t been tried (to my knowledge), because there aren’t yet enough autonomous vehicles, is how these sensors cope when another car’s sensors are potentially interfering with them. Is there an infinite spectrum available for radar use, and for lidar use too? Anyone who has tried to set up lane-specific radar speed sensors across a multi-lane motorway will catch my drift on this one. Would the camera sensors have to take account of the wavelength of the lasers used in lidar and have suitable filtering?

One worrying snippet of data: humans drive for at least eight million hours before a misidentification accident occurs. At the moment, autonomous vehicles only manage 10,000 to 30,000 hours.
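Just how big is that gap? A minimal sketch of the arithmetic follows, using only the figures quoted above. The function name and the "improvement factor" framing are my own, and I have taken the human figure as exactly eight million hours even though the article says "at least".

```python
# Hours driven per misidentification accident, as quoted in the article.
HUMAN_HOURS = 8_000_000   # "at least eight million", treated as exact (assumption)
AV_HOURS_LOW = 10_000     # lower end of the autonomous vehicle range
AV_HOURS_HIGH = 30_000    # upper end of the autonomous vehicle range


def improvement_factor(human_hours: float, av_hours: float) -> float:
    """How many times longer an AV must go between accidents to match a human."""
    return human_hours / av_hours


worst_case = improvement_factor(HUMAN_HOURS, AV_HOURS_LOW)
best_case = improvement_factor(HUMAN_HOURS, AV_HOURS_HIGH)

print(f"Gap to close: roughly {best_case:.0f}x to {worst_case:.0f}x")
```

In other words, on these numbers autonomous vehicles would need to improve by somewhere between a few hundred and eight hundred times just to draw level with us.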