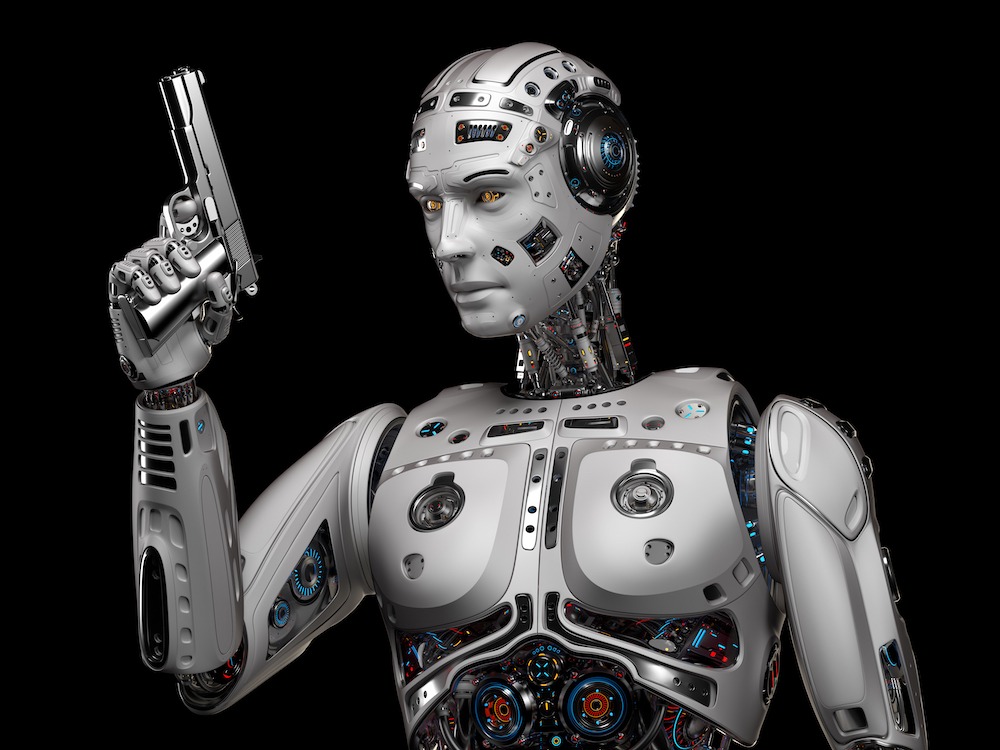

It has been reported that the Pentagon is seeking a military ethicist to help guide the moral-maze algo of AI battlefield assets, where humans may be removed from the decision-making process.

It seems that China and Russia have stolen a march on most countries in the field of AI-equipped battlefield weaponry, and pundits consider that they may not be as concerned about the morals of their AI.

So it made me wonder whether an ethically equipped AI warfare machine would be at a disadvantage when faced with a morally depraved one. I think the extra processing time needed to reach a morally justified decision would see the speedy termination of the ethical machine. To that end, would a country seeking an ethical killing machine also incorporate an ethics override switch for when the chips are down? I guess so.

Don’t forget, man has mused over the moral justification of going to war for centuries. If you fed all of the reasons, accidents and outcomes of wars gone by into an AI learning algo, I wonder what ethical justification for a future war would result.